Back in November, I gave a talk at SoftShake. As mentioned in my feedback post, I included some live-coding during the session. For the record, I’d like to share the set of tools that made me comfortable doing this Robot Framework live-coding on my Mac.

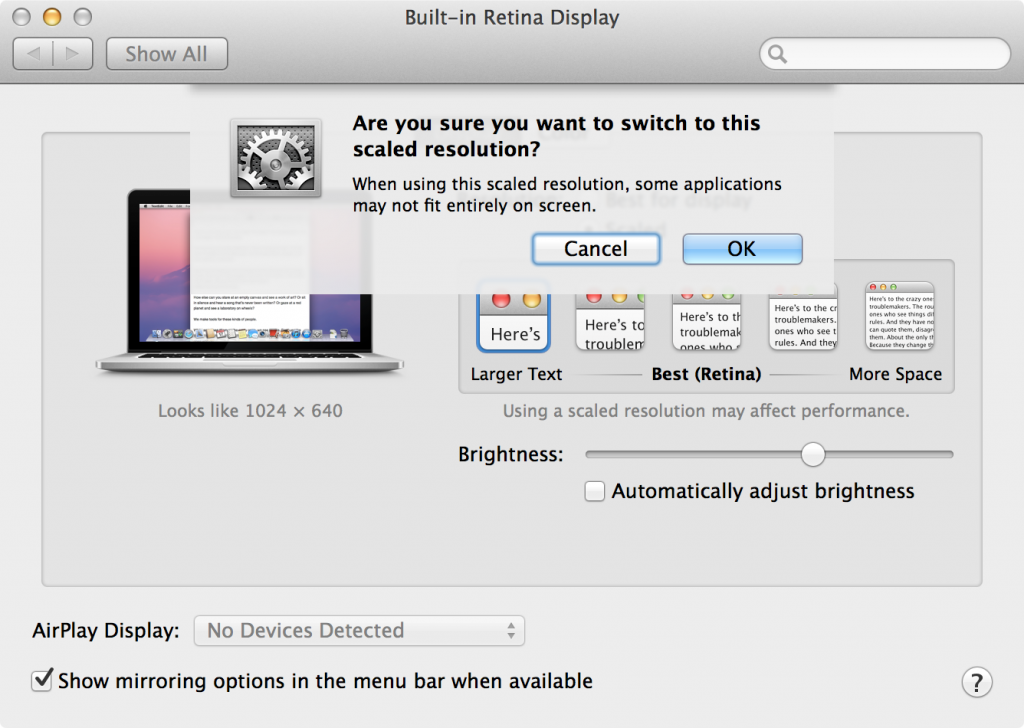

1) Screen resolution: once the laptop is plugged into the projector, the available resolution suddenly becomes very low. So it is important to rehearse the session at this low resolution, otherwise it will be very disturbing on the actual day. If you don’t have access to a projector, choosing the largest display option in Preferences should give a resolution close to the projector’s.

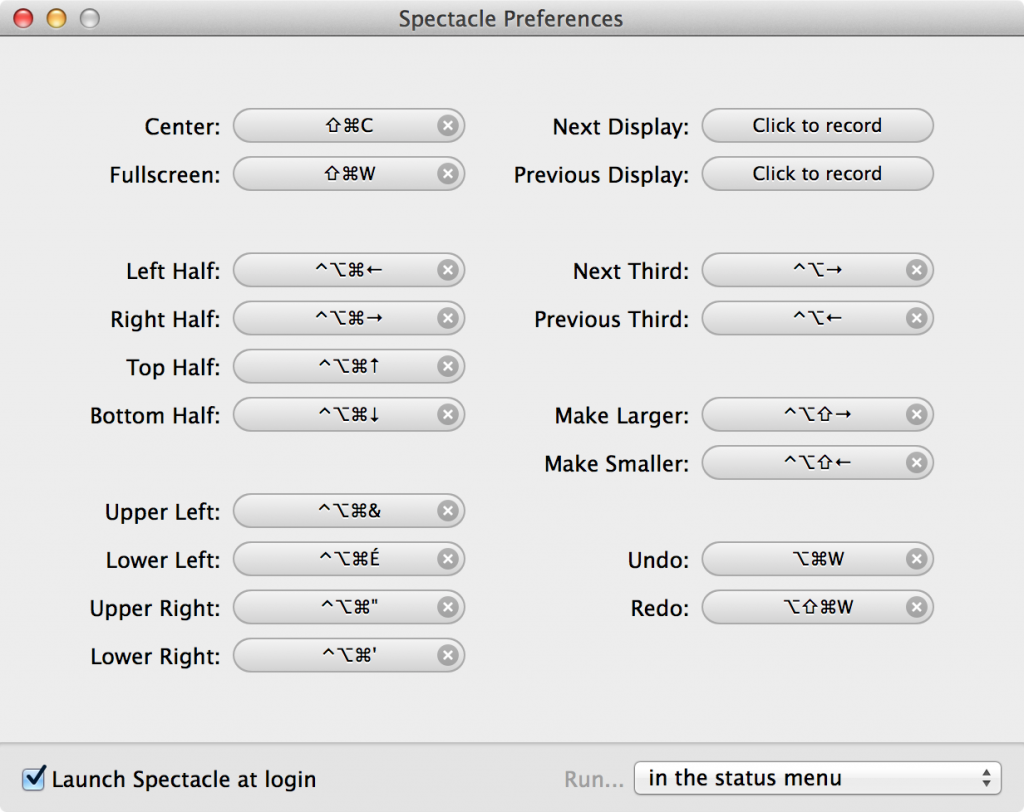

2) Window manager: I use Spectacle to quickly change the window arrangement. There are keyboard shortcuts to display a window on the full screen, on half of it or on a quarter of it. Once you get used to it, you can arrange 4 different windows on the screen in a couple of seconds.

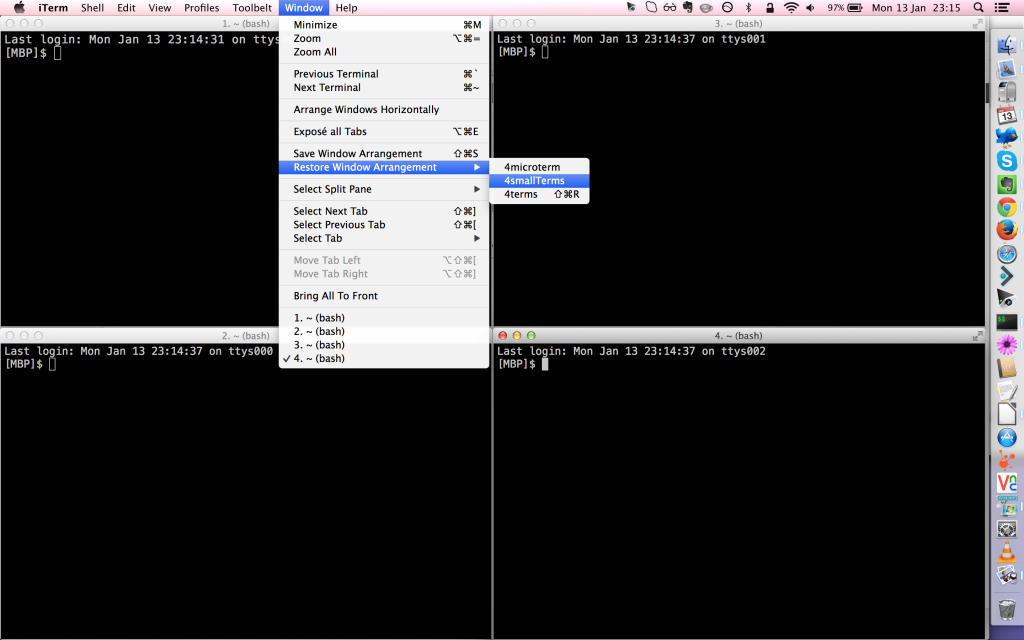

3) Terminal: I use iTerm2. To quickly get 4 small shell windows, I place them on the screen using Spectacle and save the window arrangement in iTerm2. Then I can assign a keyboard shortcut to this arrangement to restore it very quickly during the presentation.

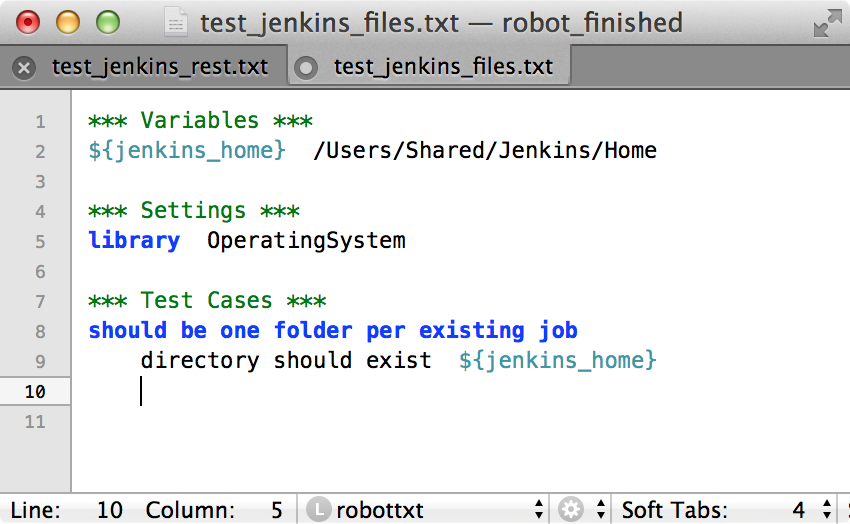

4) Text editor: I use TextMate 2 (pre-built version here) with the Robot Framework Bundle. The bundle offers some keyboard shortcuts, like *s⇥ which inserts the *** settings *** header, and nice syntax highlighting. There is no keyword completion though…

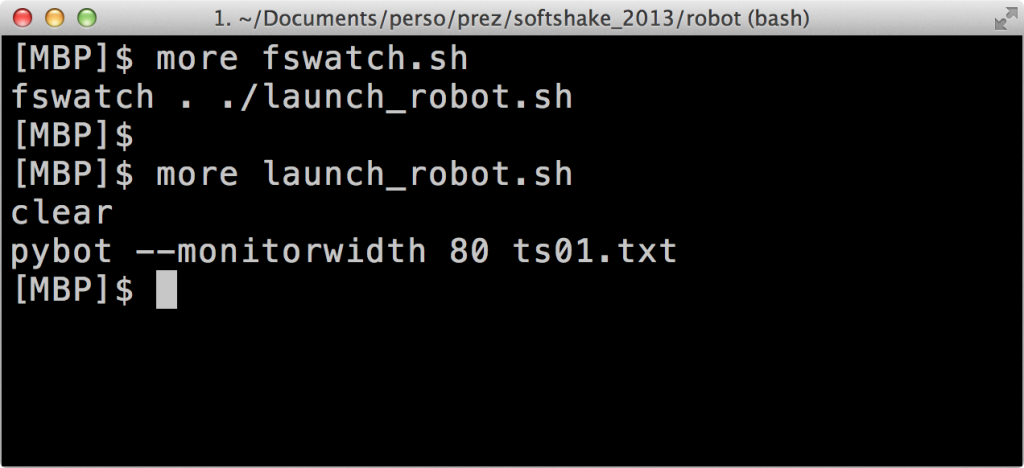

5) Live execution of the test: I wanted an effect similar to the Infinitest plugin, which runs the tests in the IDE each time the source code changes. So I installed fswatch to run a script every time the content of a directory is modified (i.e. every time my test is updated), and created a small launch_robot.sh script that is launched on such an event (the script clears the terminal, then launches Robot).

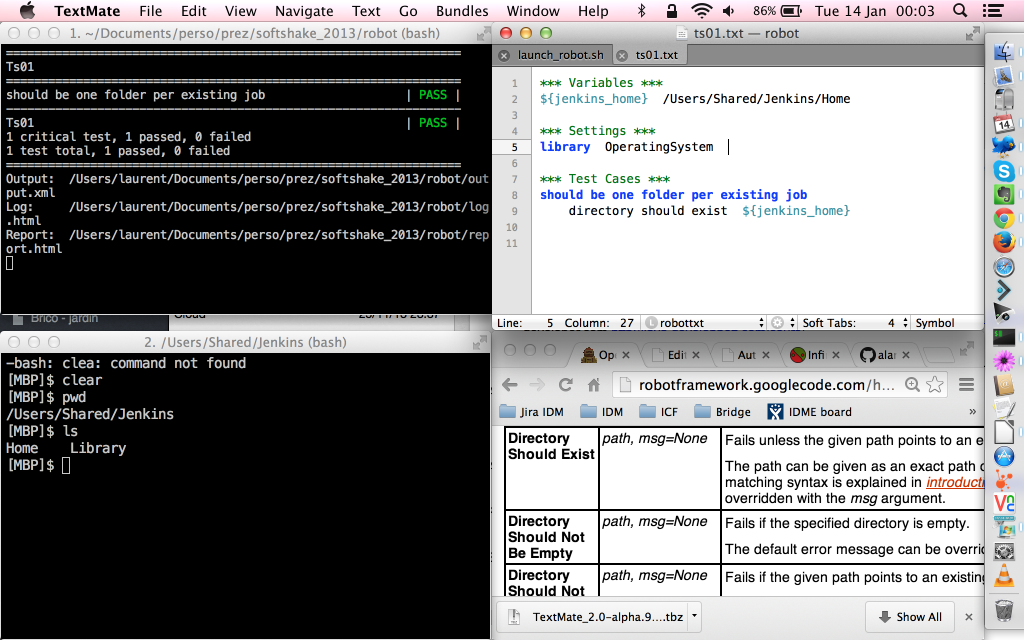
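To illustrate the "re-run on every save" idea, here is a minimal sketch in Python. It is not my actual setup (which relied on fswatch and the launch_robot.sh shell script); it simply polls a directory for modification-time changes instead. The function names, the polling interval and the `robot` command line are assumptions made for the example.

```python
import os
import subprocess
import time


def snapshot(directory):
    """Map every file under `directory` to its last-modification time."""
    state = {}
    for root, _dirs, files in os.walk(directory):
        for name in files:
            path = os.path.join(root, name)
            try:
                state[path] = os.stat(path).st_mtime_ns
            except FileNotFoundError:
                # The file disappeared between listing and stat: ignore it.
                pass
    return state


def rerun_if_changed(directory, previous, command, clear_screen=True):
    """Run `command` if the directory differs from the `previous` snapshot.

    Returns (snapshot_to_compare_against_next_time, whether_command_ran).
    """
    if snapshot(directory) == previous:
        return previous, False
    if clear_screen:
        subprocess.run(["clear"])
    # A failing test run returns a non-zero exit code; that is expected
    # during live-coding, so no exception is raised here.
    subprocess.run(command)
    # Snapshot again AFTER the run, so that report files written into the
    # watched directory by the command do not trigger an endless loop.
    return snapshot(directory), True


def watch(directory, command, interval=1.0):
    """Poll `directory` forever and re-run `command` after each change."""
    previous = snapshot(directory)
    while True:
        time.sleep(interval)
        previous, _ran = rerun_if_changed(directory, previous, command)
```

Assuming the tests live in a hypothetical `tests` directory and the `robot` command is on the PATH, calling `watch("tests", ["robot", "tests"])` in the top-left terminal would give a similar effect. Polling is cruder than fswatch, which reacts to file system events, but it needs nothing beyond the standard library.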

So, I chose to display 4 windows during the live-coding:

1) top-left: live-execution of Robot Framework

2) top-right: source code of the test, modified live

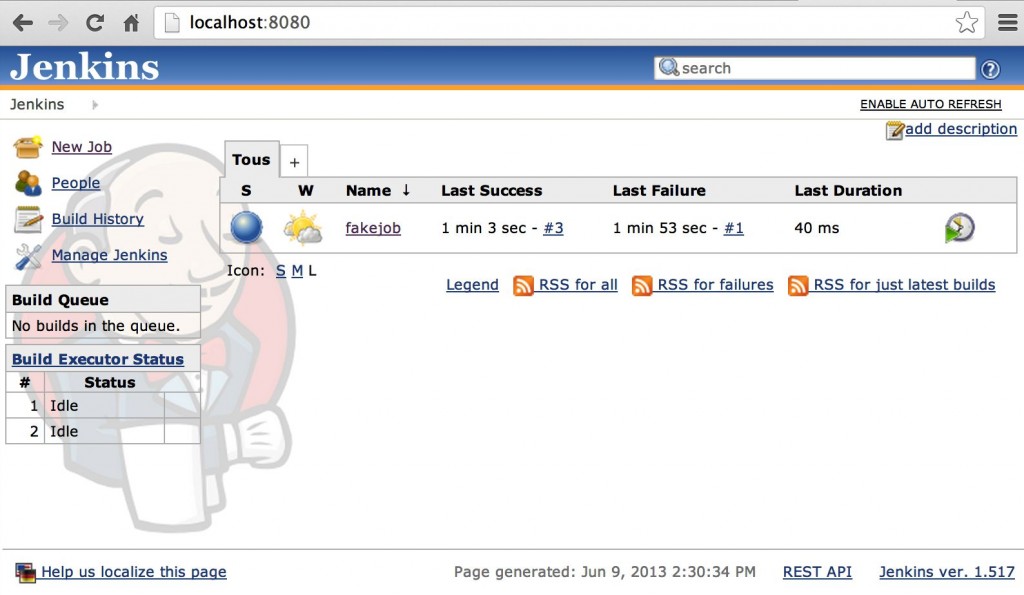

3) bottom-left: the software under test, which was Jenkins for this session (either its file structure or its UI)

4) bottom-right: web browser with the Robot Framework library documentation, to show the keywords I used in my code.

During the session, the test regularly went from green to red when I used a not-yet-created keyword or a keyword from a library I had forgotten to import. So the idea was to save the file as often as possible, to see the result change color right away.

An idea for a future session could be to do the full BDD-TDD test-code-refactor cycle:

– show the spec of the development to do

– write the failing functional test with Robot

– write the failing unit test in Java with any unit test framework

– write the minimal code to get the unit test green

– refactor the code and the test

– launch the functional test and update the code to make it green if it is still red

This could even be performed by 2 people: one QA and one dev… We’ll see if I ever try this!